Agent-Based Simulation Concepts

For simulations to be meaningful, it is necessary to define models for both virtual agents and their environment. Thus far, much attention has been given to the definition of models for agents (e.g., behavior, decision-making and interaction models), while little has been done in defining virtual environments that mimic the complexity of real-world environments.

We define an agent as a software entity that is driven by a set of tendencies in the form of individual objectives and is capable of communicating, collaborating, coordinating and negotiating with other agents. Each agent possesses resources of its own, executes in an environment that is perceived through sensors, possesses skills and can offer services.

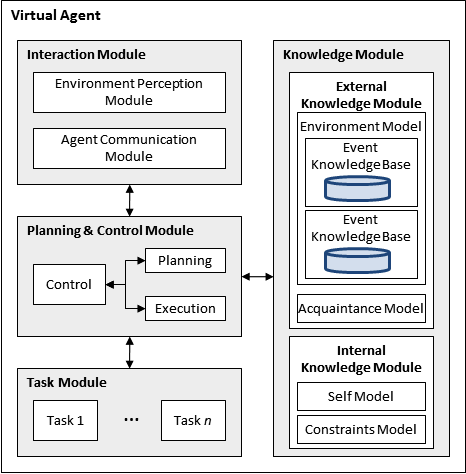

Agent Architecture

Our agent architecture consists of four modules:

The interaction module handles the agent’s interaction with external entities, separating environment interaction from agent interaction. The Environment Perception Module contains various perception modules emulating human-like senses and is responsible for perceiving information about an agent’s environment. The Agent Communication Module provides an interface for agent-to-agent communication.

The knowledge module is partitioned into an External Knowledge Module (EKM) and an Internal Knowledge Module (IKM). The EKM is the portion of the agent’s memory dedicated to maintaining knowledge about entities external to the agent, such as acquaintances and objects situated in the environment. The IKM is the portion of the agent’s memory dedicated to keeping information that the agent knows about itself, including its current state, physical constraints, and social limitations.

The task module manages the specification of the atomic tasks that the agent can perform in the domain in which it is deployed (e.g., walking, carrying, etc.).

The planning and control module serves as the brain of the agent. It uses information provided by the other modules to plan, initiate tasks, make decisions, and achieve goals.
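The four modules can be pictured with a minimal Python sketch. It is only an illustration under stated assumptions, not the DIVAs implementation: every class, method, and task name below is hypothetical, and the agent lives in a toy one-dimensional world where its only goal is to reach a target position.

```python
from dataclasses import dataclass, field


@dataclass
class KnowledgeModule:
    external: dict = field(default_factory=dict)  # EKM: acquaintances, objects
    internal: dict = field(default_factory=dict)  # IKM: own state and limits


class InteractionModule:
    """Separates environment perception from agent-to-agent communication."""

    def __init__(self):
        self.inbox = []

    def perceive(self, percepts):
        # Environment perception: pass through what the sensors report.
        return dict(percepts)

    def receive(self, message):
        # Agent communication: queue a message sent by another agent.
        self.inbox.append(message)


class TaskModule:
    """Atomic tasks (hypothetical), each updating the agent's internal state."""

    def __init__(self):
        self.tasks = {
            "walk_left": lambda s: s.update(position=s["position"] - 1),
            "walk_right": lambda s: s.update(position=s["position"] + 1),
            "wait": lambda s: None,
        }

    def run(self, name, state):
        self.tasks[name](state)


class Agent:
    def __init__(self, position, goal):
        self.interaction = InteractionModule()
        self.knowledge = KnowledgeModule(
            internal={"position": position, "goal": goal}
        )
        self.tasks = TaskModule()

    def plan(self):
        # Planning and control: pick the task that moves toward the goal.
        state = self.knowledge.internal
        if state["position"] < state["goal"]:
            return "walk_right"
        if state["position"] > state["goal"]:
            return "walk_left"
        return "wait"

    def step(self, percepts):
        # Perceive, memorize in the EKM, plan, then execute one atomic task.
        self.knowledge.external.update(self.interaction.perceive(percepts))
        task = self.plan()
        self.tasks.run(task, self.knowledge.internal)
        return task
```

Calling `Agent(position=0, goal=2).step({})` repeatedly walks the agent to its goal, after which the planner selects `wait`. The point of the sketch is the separation of concerns: planning reads from the knowledge module and delegates execution to the task module.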

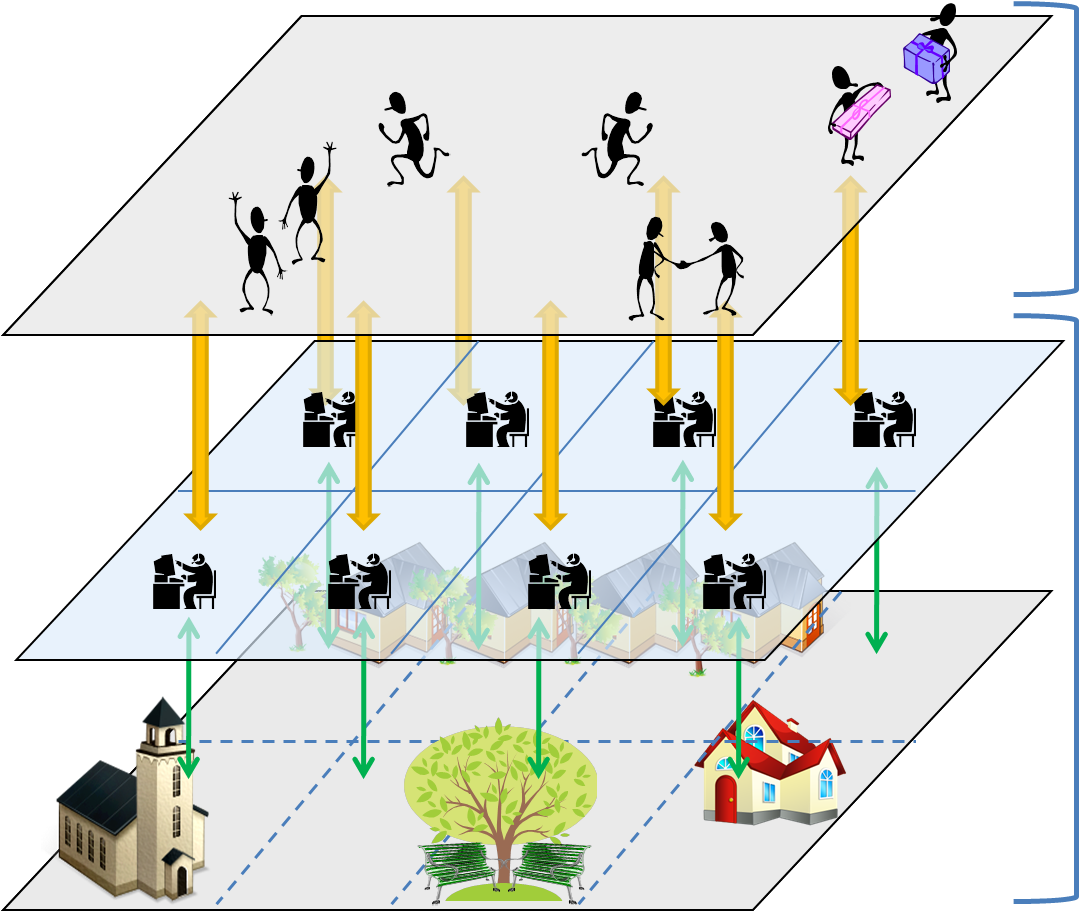

We subscribe to the idea that a virtual environment plays a critical role in a simulation and, as such, should be treated as a first-class entity in simulation design. In DIVAs, virtual agents and the environment are fully decoupled.

For large-scale simulations to be meaningful, it is necessary to implement realistic models for virtual environments. This is a non-trivial task since realistic virtual environments (also called open environments) are:

Inaccessible: virtual agents situated in these environments do not have access to global environmental knowledge but perceive their surroundings through sensors (e.g., visual, auditory, olfactory);

Non-deterministic: the effect of an action or event on the environment is not known with certainty in advance;

Dynamic: the environment constantly undergoes changes as a result of agent actions or external events;

Continuous: the environment states are not enumerable.
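Inaccessibility, the first property above, can be made concrete with a short Python sketch. It assumes a hypothetical vision sensor with a circular range in a two-dimensional world; the function name `perceive` and its parameters are illustrative and are not taken from DIVAs.

```python
import math


def perceive(agent_position, vision_range, entities):
    """Return only the entities that lie within the agent's vision range.

    `entities` maps an entity name to its (x, y) position. The agent never
    sees the full mapping, only the filtered result.
    """
    return {
        name: position
        for name, position in entities.items()
        if math.dist(agent_position, position) <= vision_range
    }
```

An agent at `(0, 0)` with a range of `2` would perceive an entity at `(1, 1)` but not one at `(10, 10)`, so its knowledge of the environment stays local.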

We achieve the simulation of openness by:

- Structuring the simulated environment model as a set of cells.

- Assigning a special-purpose design agent called a cell controller to each cell.

A cell controller does not correspond to a real-world concept but is defined for simulation engineering purposes. It is responsible for:

- Managing environmental information about its cell.

- Interacting with its local virtual agents to inform them about changes in their surroundings.

- Communicating with other cell controllers to inform them of the propagation of external events.

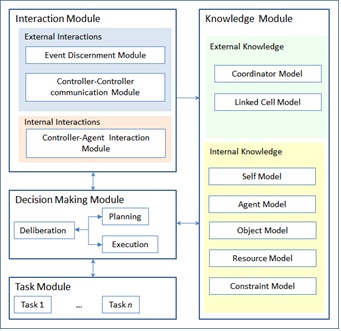

Cell Controller Architecture

The cell controller architecture consists of an interaction module, an information module, a task module, and a planning and control module.

The Interaction Module perceives external events and handles asynchronous communication among controllers as well as synchronous communication between controllers and agents. Controller-to-Agent interaction must be synchronous in order to ensure that agents receive a consistent state of the environment. Since Controller-to-Controller interaction involves high-level self-adaptation and has no bearing on the consistency of the state of the simulated environment, these interactions occur asynchronously.

The Knowledge Module contains the data a controller needs to function. It is composed of external and internal knowledge modules.

The Linked Cell Model maintains a list of neighboring cells, that is, those which share a border with the cell. The cell controller uses this information to handle events that occur near boundaries and potentially affect adjacent cells.
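As a rough illustration of the Linked Cell Model, the Python sketch below assumes the environment is partitioned into a uniform rectangular grid, which the text does not specify. Under that assumption, the cells that share a border with a given cell are its up, down, left, and right neighbors; diagonal cells touch only at a corner and are excluded. The helper names `cell_of` and `neighbors` are hypothetical.

```python
def cell_of(x, y, cell_size):
    """Map a point to the (row, column) of the grid cell that contains it."""
    return (int(y // cell_size), int(x // cell_size))


def neighbors(cell, rows, cols):
    """Return the cells that share a border with `cell` in a rows x cols grid."""
    row, col = cell
    candidates = [
        (row - 1, col),  # above
        (row + 1, col),  # below
        (row, col - 1),  # left
        (row, col + 1),  # right
    ]
    # Drop candidates that fall outside the grid.
    return [(r, c) for r, c in candidates if 0 <= r < rows and 0 <= c < cols]
```

A controller could consult `neighbors` when an event occurs near a boundary to decide which adjacent controllers to notify.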

The Self Model contains information about the controller (e.g., id) as well as the essential characteristics of the cell assigned to the controller such as its identifier and region boundaries.

The Agent Model contains minimal information, such as identification and location, about the agents within the cell’s environment region.

The Object Model includes information detailing physical entities that are situated within the cell region but are not actual agents (e.g., barricades, buildings, road signs).

The Resource Model contains information about the resources available to the controller.

The Constraint Model defines the specific properties and laws of the environment.
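The six models described above could be grouped into a single data structure, as in the Python sketch below. The field names, the rectangular `bounds` tuple, and the `track_agent` helper are assumptions made for illustration; the text does not say how DIVAs represents these models.

```python
from dataclasses import dataclass, field


@dataclass
class SelfModel:
    controller_id: str
    cell_id: str
    bounds: tuple  # assumed layout: (x_min, y_min, x_max, y_max)


@dataclass
class ControllerKnowledge:
    self_model: SelfModel
    linked_cells: list = field(default_factory=list)  # ids of bordering cells
    agents: dict = field(default_factory=dict)        # agent id -> (x, y)
    objects: dict = field(default_factory=dict)       # non-agent entities
    resources: dict = field(default_factory=dict)     # controller resources
    constraints: dict = field(default_factory=dict)   # environment laws

    def contains(self, x, y):
        """Check whether a point lies inside the cell's region boundaries."""
        x_min, y_min, x_max, y_max = self.self_model.bounds
        return x_min <= x < x_max and y_min <= y < y_max

    def track_agent(self, agent_id, x, y):
        """Record an agent's location, or forget it once it leaves the cell."""
        if self.contains(x, y):
            self.agents[agent_id] = (x, y)
        else:
            self.agents.pop(agent_id, None)
```

Keeping only an identifier and a location per agent mirrors the "minimal information" role of the Agent Model.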

The Task Module manages the specification of the atomic tasks that the controller can perform.

The Planning and Control Module serves as the brain of the controller. It uses information provided by the other modules to plan, initiate tasks and make decisions.

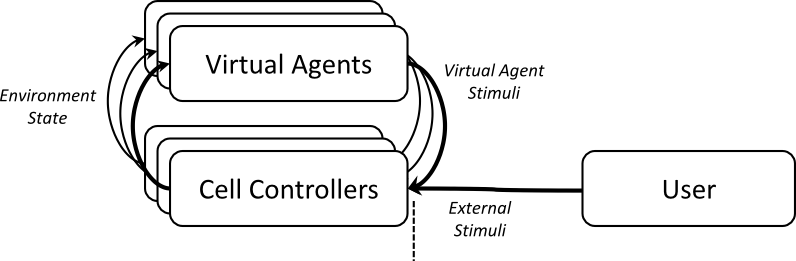

Context-aware agents must be constantly informed about changes in their own state and the state of their surroundings. Similarly, the environment must react to influences imposed upon it by virtual agents and users outside of the simulation.

We have defined the Action-Potential/Result (APR) model for agent-environment interactions. The APR model extends the influence-reaction model to handle open environments, external stimuli, agent perception and influence combination.

This model is driven by continuous time ticks. At every tick, when agents execute actions, they produce stimuli that are synchronously communicated to their cell controller (i.e., the cell controller managing the cell in which the agent is situated). Cell controllers interpret and combine these agent stimuli as well as any external stimuli triggered by the user of the simulation. Once cell controllers determine the total influence of the stimuli, they update the state of their cells. Each cell controller then publishes its new state to the agents located within its cell boundaries. Upon receiving the updated state of the environment, agents perceive the environment, memorize the perceived information and decide how to act. The cycle repeats when the next tick occurs.
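The tick cycle described above can be sketched in a few lines of Python. This is a toy model under explicit assumptions, not the APR implementation: the cell state is a single hypothetical `noise` value, stimuli are combined by simple addition, and the class names `CellController` and `NoisyAgent` are invented for the example. Direct method calls stand in for synchronous controller-to-agent communication.

```python
class NoisyAgent:
    """Toy agent: talks unless the perceived noise exceeds its tolerance."""

    def __init__(self, tolerance):
        self.tolerance = tolerance
        self.memory = []           # perceived states, oldest first
        self.next_action = "talk"

    def act(self):
        # Executing an action produces a stimulus (noise units emitted).
        return 1 if self.next_action == "talk" else 0

    def receive(self, state):
        # Perceive and memorize the published state, then decide how to act.
        self.memory.append(state)
        if state["noise"] <= self.tolerance:
            self.next_action = "talk"
        else:
            self.next_action = "stay_quiet"


class CellController:
    def __init__(self):
        self.state = {"noise": 0}

    def tick(self, agents, external_stimuli=()):
        # 1. Collect the stimuli produced by agent actions.
        agent_stimuli = [agent.act() for agent in agents]
        # 2. Combine agent and external stimuli (assumed: a plain sum).
        total = sum(agent_stimuli) + sum(external_stimuli)
        # 3. Update the state of the cell.
        self.state = {"noise": total}
        # 4. Publish the new state to every agent in the cell.
        for agent in agents:
            agent.receive(dict(self.state))
```

Each call to `tick` is one pass through the cycle; an external stimulus such as `controller.tick(agents, external_stimuli=(4,))` models a user-triggered event that is combined with the agents' own influences.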

- Rym Zalila-Mili, E. Oladimeji, and Renee Steiner. Architecture of the DIVAs Simulation System. In Proceedings of Agent-Directed Simulation Symposium ADS06, Huntsville, Alabama, April 2006. Society for Modeling and Simulation.

- Rym Zalila-Mili, Renee Steiner, and E. Oladimeji. DIVAs: Illustrating an Abstract Architecture for Agent-Environment Simulation Systems. Multiagent and Grid Systems. Special issue on Agent-Oriented Software Development Methodologies, 2(4):505-525, January 2006.

- Renee Steiner, G. Leask, and Rym Mili. An Architecture for MAS Simulation Environments, volume 3830, pages 50-67. Springer Verlag, 2006.

- R. Steiner, G. Leask, and R. Mili. An Architecture for MAS Simulation Environments. In Proceedings of ACM Conference on Autonomous Agents and Multi Agent Systems, pages 50-67, Utrecht, The Netherlands, July 2005.

- Rym Mili, G. Leask, U. Shakya, and Renee Steiner. Architectural Design of the DIVAs Environment. In Proceedings of Environments for Multi-Agent Systems (E4MAS04), Columbia University, NY, July 2004.
